Artificial Intelligence (AI) is no longer experimental — it’s operational. From underwriting in finance to personalized marketing in retail, AI models are driving decisions that directly affect customers, employees, and outcomes at scale.

As AI continues to reshape enterprise workflows, boost efficiency, and unlock innovation, the spotlight on AI-related risk is intensifying.

However, the transformative power of AI comes with a new class of risks that traditional GRC (Governance, Risk, and Compliance) frameworks were not designed to address. These include algorithmic bias, model drift, explainability failures, misuse of personal data, and rapidly evolving global regulatory obligations. Unlike conventional IT or cyber risks, AI risks are dynamic, opaque, and increasingly reputational.

This means GRC leaders and risk officers can no longer rely solely on legacy dashboards or ad hoc processes to manage emerging AI threats.

To govern AI responsibly and proactively, enterprises must embed AI risk management directly into their GRC strategy, and the linchpin of that integration is a fit-for-purpose AI GRC Dashboard.

In this blog post, we’ll guide you through how to design, deploy, and optimize a GRC dashboard tailored specifically for AI risk oversight.

From tracking fairness metrics and compliance status to auditing model changes and forecasting future risks, we’ll outline:

Why enterprises need dedicated AI risk dashboards

The five core functions every AI GRC dashboard must fulfill

Practical examples of role-based dashboards for executives, developers, and GRC teams

Integration tips to connect the dashboard to your MLOps and compliance ecosystem

Design best practices and automation strategies to scale governance

Visionary features to future-proof your AI risk oversight

Whether you're building your AI governance capability from the ground up or enhancing your existing GRC program, this guide will help you align responsible AI practices with real-time visibility, accountability, and control.

Let’s dive into why AI requires a new approach — and how a strategic GRC dashboard makes that transformation possible.

Why Enterprises Need an AI Risk Dashboard

AI systems bring a new set of risks that differ significantly from traditional IT or cyber risks.

These include:

Algorithmic bias and fairness concerns

Model drift and decay over time

Data privacy and misuse of personal data

Lack of explainability and transparency

Unclear accountability and governance roles

Non-compliance with regulations and frameworks (e.g., EU AI Act, GDPR, NIST AI RMF)

Given these factors, enterprises must treat AI as a distinct risk domain and integrate it into enterprise risk management processes using tailored visibility tools — such as a GRC dashboard.

🎯 Core Functions of an AI Risk GRC Dashboard

An effective AI Risk GRC Dashboard is not just a data visualization tool — it's an enterprise control mechanism for managing the governance, risk, and compliance lifecycle of artificial intelligence systems.

Here’s a deeper dive into the five core functions every AI-focused GRC dashboard should enable:

✅ 1. Centralized Visibility

What it does:

Aggregates and presents a single-pane-of-glass view into all AI-related assets, risks, controls, and compliance activities across the enterprise.

Why it matters:

Without centralization, AI risk data remains siloed across teams (data science, security, compliance), making oversight and accountability nearly impossible.

Key Capabilities:

AI system inventory (models in development, testing, production)

Central risk register linked to AI initiatives

Control coverage by model/system

Data flow maps and third-party integrations (e.g., OpenAI, AWS)

Example View:

An interactive heatmap showing all AI models by department, risk level, and current governance status (e.g., “Approved,” “Pending DPIA,” “Failed Fairness Audit”).
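As a rough illustration of the data behind such a heatmap, the sketch below counts models per department and risk level. The `ModelRecord` fields, the model names, and the sample rows are assumptions made for this example, not a prescribed inventory schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRecord:
    """One row of a hypothetical AI system inventory."""
    name: str
    department: str
    risk_level: str          # e.g. "Low", "Medium", "High"
    governance_status: str   # e.g. "Approved", "Pending DPIA"


def heatmap_counts(inventory):
    """Count models per (department, risk_level) cell for a heatmap."""
    return Counter((m.department, m.risk_level) for m in inventory)


# Illustrative data only; not drawn from any real system.
inventory = [
    ModelRecord("credit-scoring-v3", "Finance", "High", "Failed Fairness Audit"),
    ModelRecord("churn-predictor", "Marketing", "Medium", "Approved"),
    ModelRecord("loan-pricing", "Finance", "High", "Pending DPIA"),
]

cells = heatmap_counts(inventory)
for (department, risk_level), count in sorted(cells.items()):
    print(f"{department:<10} {risk_level:<7} {count}")
```

A BI tool would typically do this grouping itself; the point is that the inventory needs department, risk level, and governance status as first-class fields.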

⚠️ 2. Real-Time Risk Monitoring

What it does:

Continuously tracks and surfaces emerging AI risks using automated feeds from MLOps platforms, model performance tools, and threat monitoring systems.

Why it matters:

AI risks (e.g., bias, drift, unauthorized retraining) can emerge rapidly. A real-time GRC dashboard turns these dynamic events into instant, trackable governance actions.

Key Triggers to Monitor:

Model performance drift or decay

Deployment of models without proper approval or testing

Anomalous outputs or flagged decisions

Security vulnerabilities in AI APIs or inference layers

Automation Ideas:

Trigger alerts in ServiceNow or Slack when fairness thresholds are violated

Initiate automated risk reviews when a model enters production without documented explainability
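The fairness-threshold idea can be sketched as follows. This example uses the demographic parity gap (the difference in approval rates between groups) as the fairness metric and a 0.10 threshold; both are assumptions chosen for illustration, and the function only builds an alert payload rather than calling ServiceNow or Slack.

```python
def selection_rate(decisions):
    """Share of positive (1) decisions in a list of 0/1 outcomes."""
    return sum(decisions) / len(decisions)


def demographic_parity_gap(decisions_by_group):
    """Largest difference in selection rate between any two groups."""
    rates = [selection_rate(d) for d in decisions_by_group.values()]
    return max(rates) - min(rates)


def fairness_alert(model_name, decisions_by_group, max_gap=0.10):
    """Return an alert dict if the gap exceeds max_gap, else None.

    max_gap=0.10 is an illustrative threshold, not a regulatory value.
    """
    gap = demographic_parity_gap(decisions_by_group)
    if gap <= max_gap:
        return None
    return {
        "model": model_name,
        "metric": "demographic_parity_gap",
        "value": round(gap, 3),
        "threshold": max_gap,
        "action": "Open fairness review",
    }


# Illustrative decisions (1 = approved, 0 = declined) for two groups.
alert = fairness_alert(
    "credit-scoring-v3",
    {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]},
)
print(alert)
```

The returned dictionary is the piece you would hand to whatever notification or ticketing integration your organization already uses.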

📋 3. Compliance Tracking

What it does:

Maps AI systems against a variety of regulatory and internal compliance frameworks, identifying gaps and providing remediation paths.

Why it matters:

The AI regulatory landscape is expanding rapidly (e.g., EU AI Act, NIST AI RMF, ISO/IEC 42001). Enterprises must demonstrate continuous compliance, not just point-in-time readiness.

Compliance Frameworks to Integrate:

🏛 EU AI Act: Assign risk levels (Minimal, Limited, High, Unacceptable/Prohibited) to each model and track required safeguards.

🛡 NIST AI RMF: Map models to core functions: Govern, Map, Measure, and Manage.

📊 ISO/IEC 42001: Implement AI-specific control objectives and document AI management system processes.

🧭 Internal AI Ethics Policy: Monitor adherence to organizational AI principles (fairness, transparency, accountability).

Dashboard Output:

Compliance scorecards per model, department, or region — complete with pass/fail indicators and mitigation timelines.
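One possible way to roll control results up into a department-level scorecard is shown below. The row layout (department, model, control, passed) and the control names are hypothetical; a real implementation would read these from your GRC platform.

```python
from collections import defaultdict


def department_scorecards(results):
    """Roll up (department, model, control, passed) rows into a pass rate per department."""
    tally = defaultdict(lambda: {"passed": 0, "total": 0})
    for department, _model, _control, passed in results:
        tally[department]["total"] += 1
        tally[department]["passed"] += int(passed)
    return {
        dept: round(100 * t["passed"] / t["total"], 1)
        for dept, t in tally.items()
    }


# Illustrative control results; names are made up for this sketch.
results = [
    ("Finance", "credit-scoring-v3", "human_oversight", False),
    ("Finance", "credit-scoring-v3", "logging_enabled", True),
    ("Finance", "loan-pricing", "logging_enabled", True),
    ("Marketing", "churn-predictor", "logging_enabled", True),
]

scores = department_scorecards(results)
for dept, pct in sorted(scores.items()):
    print(f"{dept}: {pct}% of controls passing")
```

The same grouping works per model or per region by changing which column is used as the key.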

🧾 4. Incident and Model Audit Logs

What it does:

Captures a full audit trail of AI lifecycle activities — from model creation to updates, risk reviews, and deployment changes — ensuring transparency and accountability.

Why it matters:

Auditability is a core requirement of most AI risk frameworks. In regulated sectors (finance, healthcare, defense), forensic-level traceability is essential to demonstrate due diligence.

What to Log:

Model version history and training data lineage

Change approvals and retraining decisions

Fairness and security test results

Risk escalations and remediation steps

Role-based model access activity

Visual Output:

Timeline view of a model’s lifecycle, color-coded by status (e.g., “Reviewed,” “Pending Review,” “Flagged for Bias”) with clickable access to documents and controls.
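A minimal sketch of the data behind such a timeline, assuming each audit entry is stored as an immutable record. The `AuditEvent` fields, actor names, and dates are illustrative assumptions, not a required log format.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)  # frozen: entries cannot be modified after creation
class AuditEvent:
    timestamp: datetime
    model: str
    version: str
    event: str    # e.g. "Retraining approved"
    status: str   # e.g. "Reviewed", "Pending Review", "Flagged for Bias"
    actor: str


def timeline(events, model):
    """Return one model's events in chronological order."""
    return sorted((e for e in events if e.model == model), key=lambda e: e.timestamp)


# Illustrative events, deliberately out of order.
events = [
    AuditEvent(datetime(2024, 3, 12, 9, 0), "credit-scoring-v3", "3.1",
               "Fairness test run", "Flagged for Bias", "risk.analyst"),
    AuditEvent(datetime(2024, 1, 5, 14, 30), "credit-scoring-v3", "3.0",
               "Model registered", "Reviewed", "ml.engineer"),
    AuditEvent(datetime(2024, 2, 20, 11, 15), "credit-scoring-v3", "3.1",
               "Retraining approved", "Pending Review", "grc.manager"),
    AuditEvent(datetime(2024, 2, 1, 8, 0), "churn-predictor", "1.4",
               "Model registered", "Reviewed", "ml.engineer"),
]

history = timeline(events, "credit-scoring-v3")
for e in history:
    print(f"{e.timestamp:%Y-%m-%d}  v{e.version}  {e.event}  [{e.status}]")
```

The `status` field is what a dashboard would use for color-coding each point on the timeline.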

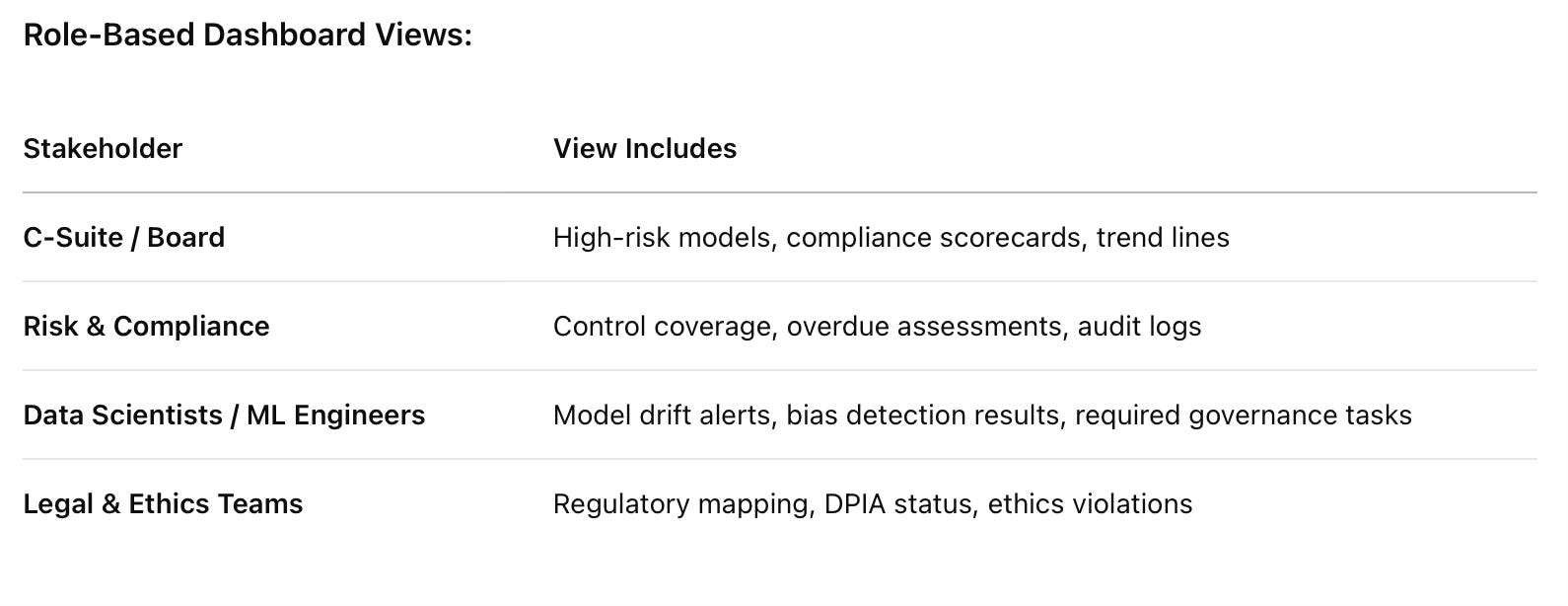

👥 5. Stakeholder Reporting

What it does:

Delivers role-based dashboard views tailored to the needs of different stakeholders, from board-level summaries to technical deep-dives for engineers and analysts.

Why it matters:

Not all users need the same level of detail. Executives need strategic insights; compliance teams need control status; data scientists need technical risk indicators.

Bonus Feature:

Enable export to PDF or automated email summaries to key stakeholders on a weekly/monthly basis.
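Role-based views can be as simple as filtering one underlying record by audience. The sketch below assumes three roles and a flat record; the role names, field names, and values are placeholders for illustration.

```python
# Which fields each audience sees. Roles and fields are illustrative.
ROLE_FIELDS = {
    "executive": {"model", "risk_level", "compliance_status"},
    "compliance": {"model", "compliance_status", "open_controls"},
    "data_scientist": {"model", "drift_score", "fairness_gap"},
}


def view_for(role, record):
    """Return only the fields of a model record that the given role should see."""
    allowed = ROLE_FIELDS[role]
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "model": "credit-scoring-v3",
    "risk_level": "High",
    "compliance_status": "FAIL",
    "open_controls": 2,
    "drift_score": 0.31,
    "fairness_gap": 0.12,
}

print(view_for("executive", record))
print(view_for("data_scientist", record))
```

Keeping one source record and deriving views from it avoids the drift that occurs when each audience maintains its own copy of the data.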

Each of these five functions isn’t just a dashboard feature — it's a pillar of responsible AI governance. When woven together through strong integrations and automation, your AI GRC dashboard becomes a living system that doesn’t just observe risk — it helps your organization control it, justify it, and evolve with it.

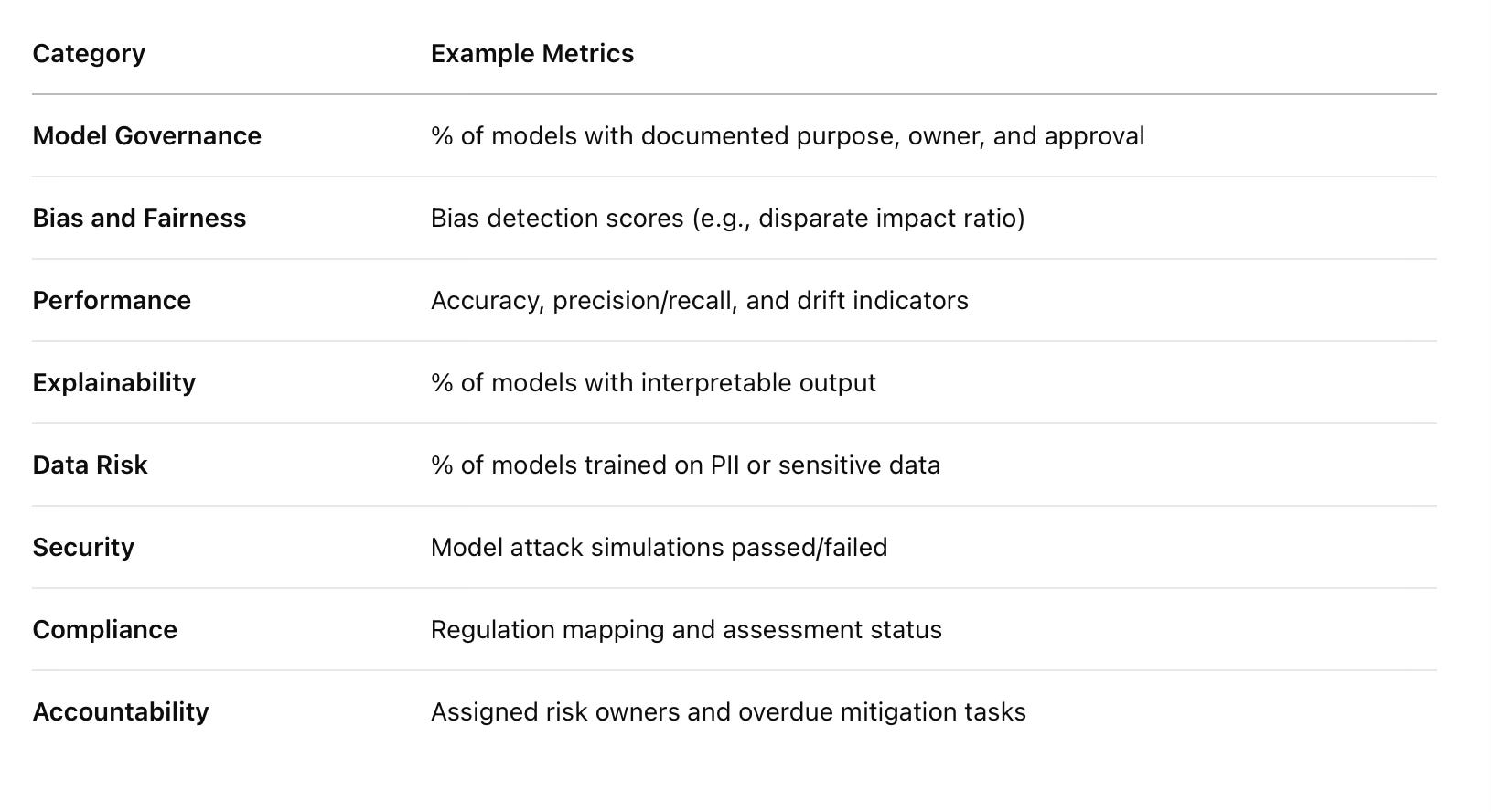

Key Metrics to Include in an AI Risk Dashboard

To be actionable, a GRC dashboard must track both quantitative and qualitative metrics.

Examples include:

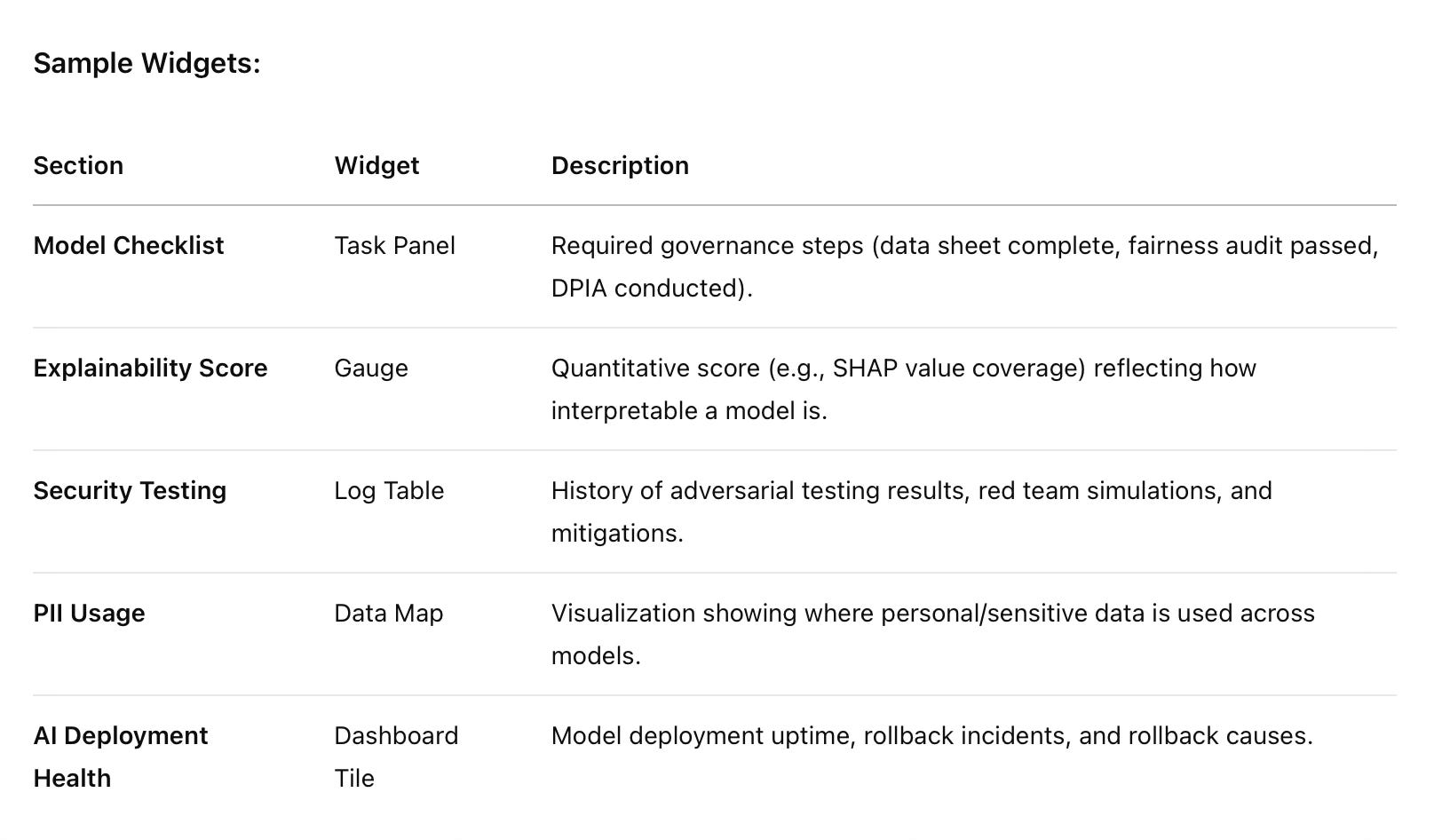

These metrics allow organizations to move from reactive to proactive AI risk management.

Design Best Practices for AI Risk Dashboards

To create an impactful GRC dashboard for AI, enterprises should follow these best practices:

✅ Modular Structure

Use modular components (tiles, graphs, gauges) that allow users to drill down from high-level summaries to technical detail.

✅ Role-Based Views

Design different dashboards or access levels for compliance, risk, IT, and AI teams to avoid overwhelming users with irrelevant data.

✅ Integration with Existing GRC Tools

Embed the dashboard into your existing GRC platforms (e.g., Archer, ServiceNow, MetricStream) to maintain consistency in workflows and controls.

✅ Automated Data Feeds

Leverage APIs and integrations to continuously pull data from MLOps platforms (e.g., MLflow, SageMaker, Azure ML), issue trackers, and compliance tools.

✅ Alerting and Workflows

Incorporate workflows for escalating AI risks, triggering assessments, and documenting mitigations directly from the dashboard interface.

Challenges and Considerations

While building an AI-focused GRC dashboard is essential, it's not without challenges:

Data availability: AI risk data may be fragmented across multiple tools and teams.

Lack of standards: AI risk scoring is still an emerging practice with evolving benchmarks.

Cultural change: Embedding GRC into the AI/ML lifecycle requires alignment between data scientists and risk teams.

Overcoming these challenges involves collaboration, standardization, and executive sponsorship.

AI Risk GRC Dashboard Examples for Enterprise Stakeholders

Below are three example GRC dashboard mockups designed specifically for tracking AI-related risks in enterprise environments.

Each dashboard serves a specific stakeholder group and contains practical, actionable widgets and metrics that align with GRC principles and AI governance frameworks like NIST AI RMF and ISO/IEC 42001.

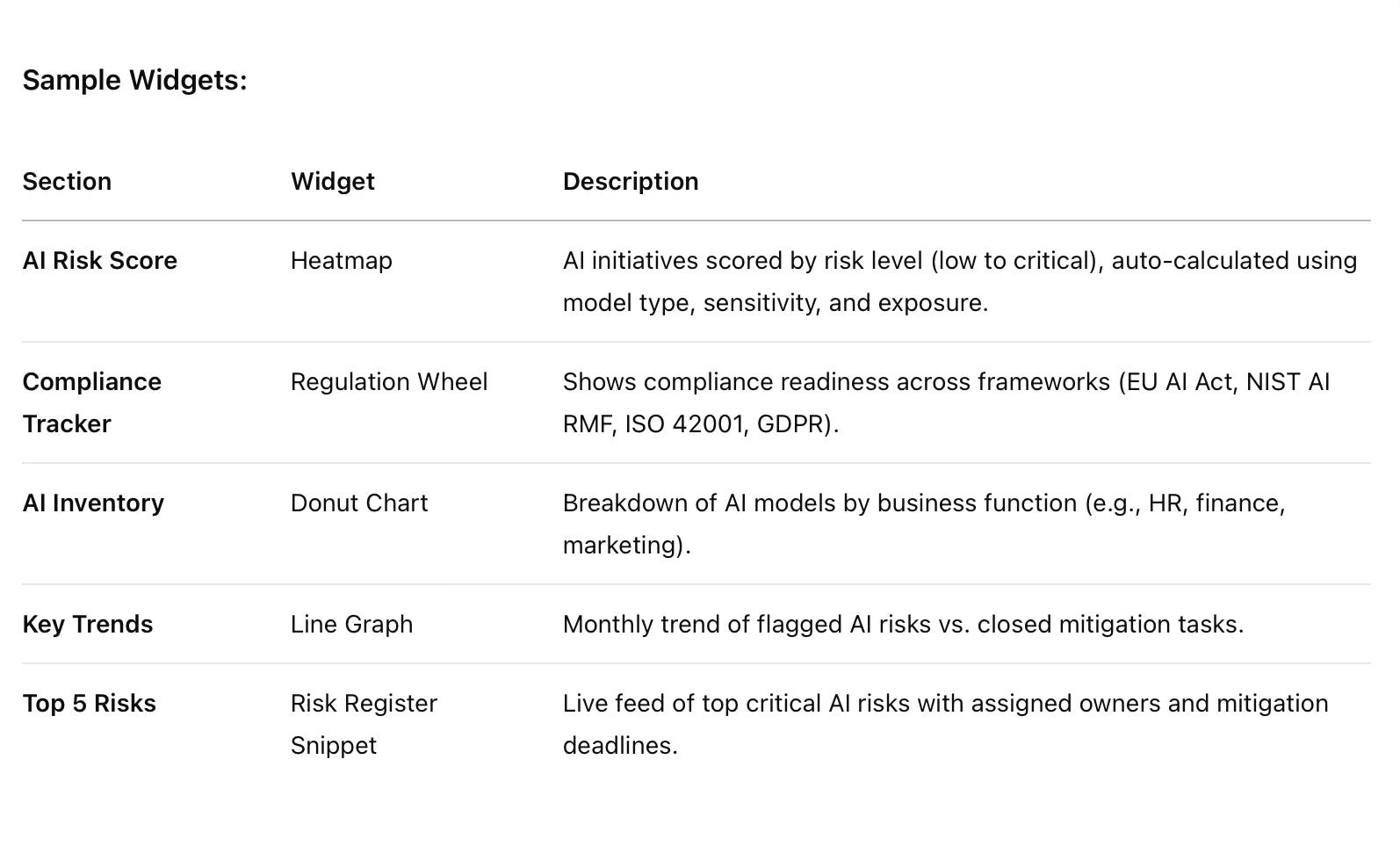

🧠 1. AI Risk Executive Overview Dashboard

Audience: CIO, CISO, Chief Risk Officer, Board

Purpose: Provide a high-level view of AI risks across the organization with clear KPIs and trend indicators.

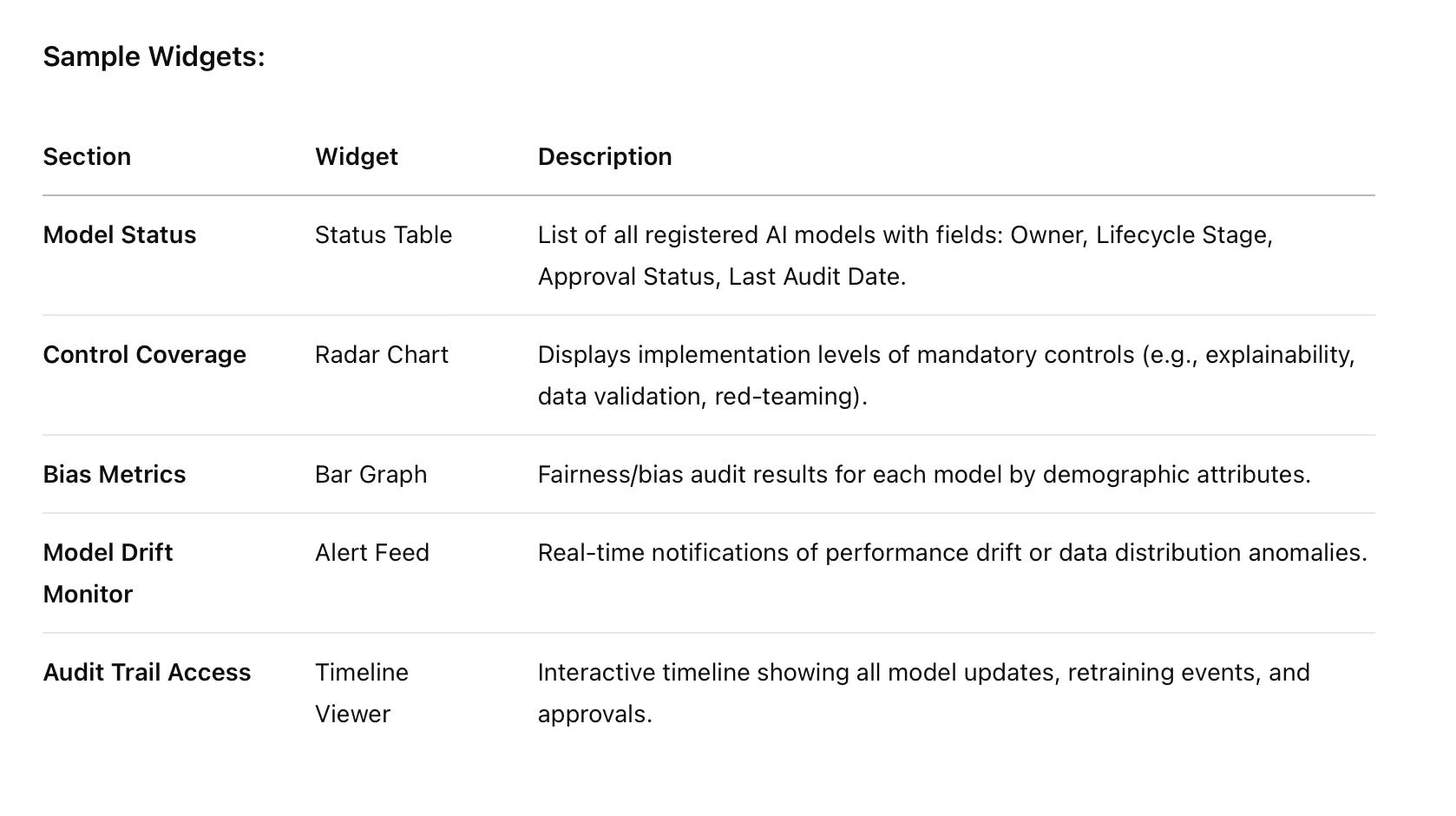

🛠️ 2. Operational AI Governance Dashboard

Audience: AI Governance Officers, Risk Analysts, GRC Managers

Purpose: Monitor risk indicators and control effectiveness across the AI model lifecycle.

👨‍💻 3. AI Developer / MLOps Dashboard (Risk-Embedded Dev View)

Audience: Data Scientists, ML Engineers, DevSecOps

Purpose: Integrate GRC requirements directly into AI/ML workflows.

📌 Dashboard Integration Notes:

Designing a future-ready GRC dashboard for AI risk isn’t just about the front-end visuals — it’s about creating seamless back-end integrations, ensuring data reliability, and automating governance workflows across the enterprise.

Here’s how to make sure your AI GRC dashboard isn’t just insightful — but operationally embedded and always up-to-date.

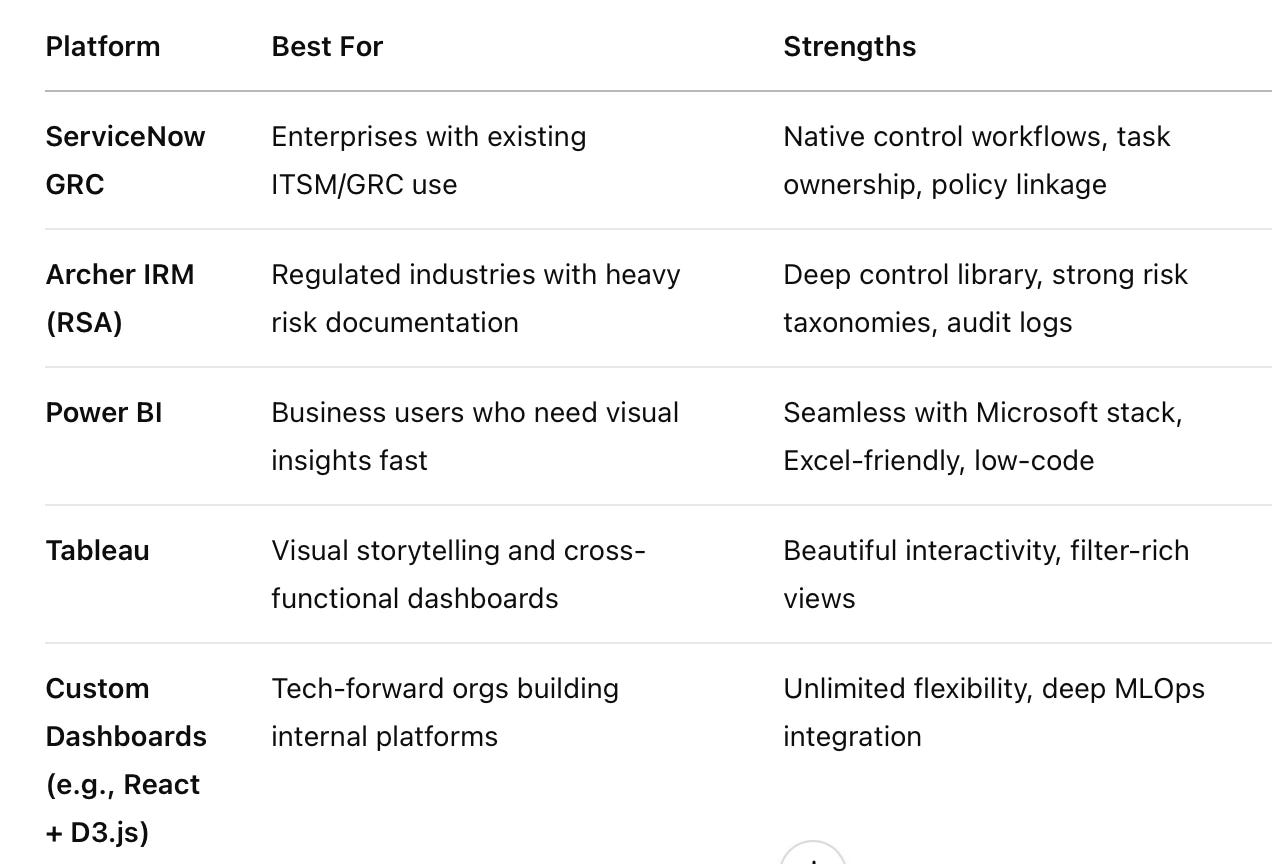

🧩 Platform Recommendations – What to Build With (and When)

Your choice of dashboard platform should align with your organization's existing ecosystem, GRC maturity, and integration needs.

Below are top platform choices and what they’re best suited for:

🛠️ Strategic Advice:

Choose a platform that not only supports traditional GRC data but can ingest live AI model metadata and risk signals from engineering pipelines.

If you already use ServiceNow GRC for policy management or Jira for development, your dashboard must plug into those tools directly.

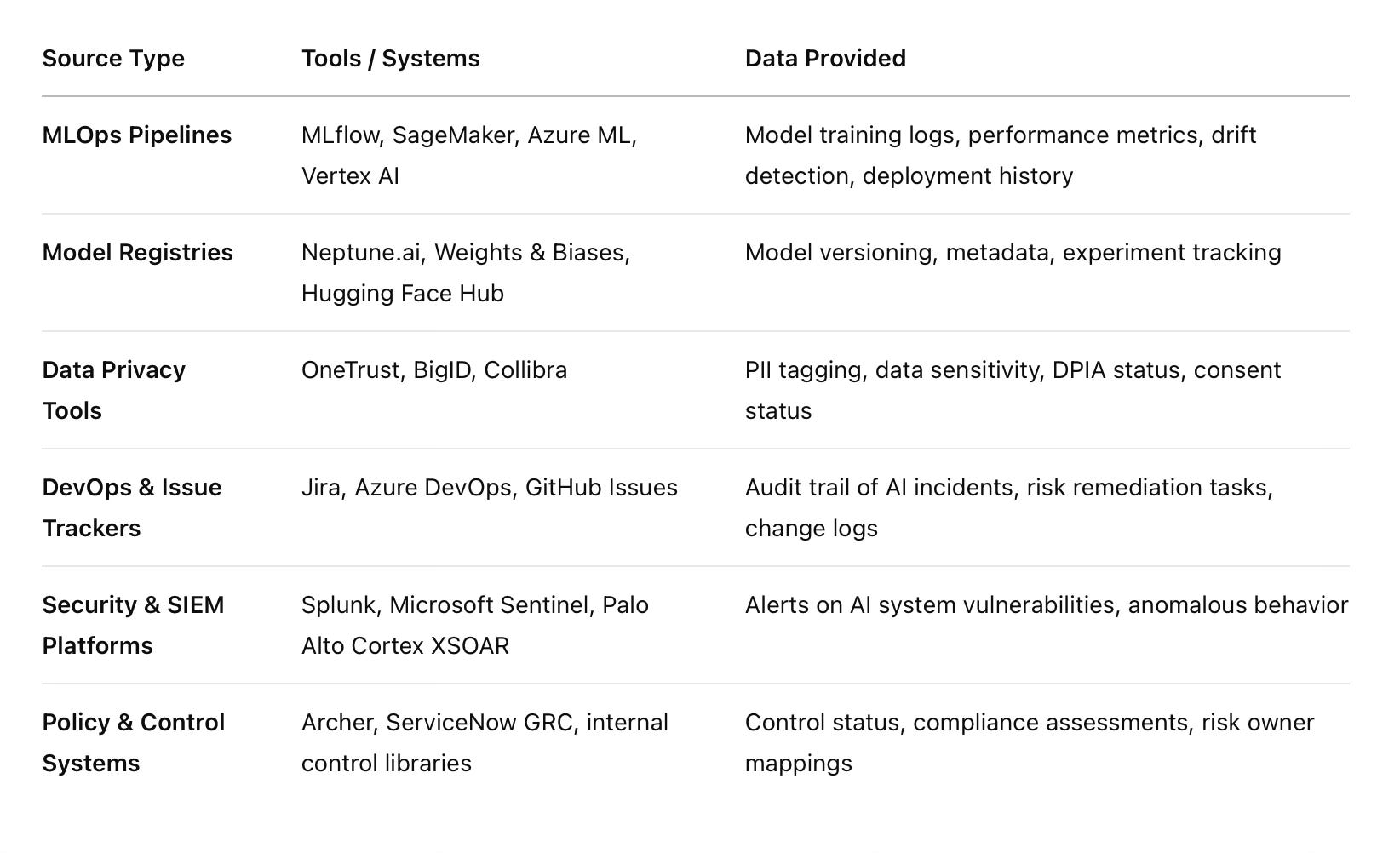

🔄 Data Feeds – What to Connect for Real-Time Insight

A strong AI GRC dashboard thrives on continuous data ingestion from diverse sources across your organization.

Here's a breakdown of essential data feeds:

📊 Integration Tip:

Establish ETL (Extract, Transform, Load) pipelines or use low-code integration tools (e.g., Workato, Zapier, MuleSoft) to regularly sync these systems with your dashboard.

🤖 Automation Tip – Making Your GRC Dashboard Truly Intelligent

Manual updates kill dashboards — especially in fast-paced AI environments.

Automate wherever possible using APIs, webhook triggers, and CI/CD pipelines.

Here’s how:

🔗 API-Driven Data Sync

Use REST APIs to pull metadata from MLflow, SageMaker, or Azure ML every hour.

Sync control statuses from ServiceNow GRC or Archer via scheduled queries.

🔄 CI/CD Workflow Integration

Automatically update model lifecycle status in your dashboard when a new model is deployed via GitHub Actions or Azure DevOps pipelines.

Trigger risk reassessments or alert flags if automated model performance monitoring detects drift or fairness issues.
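One common way to detect drift in a pipeline step is the Population Stability Index (PSI), which compares the binned distribution of a feature or score at training time with what the model sees in production. The sketch below is one option among several; the 0.10 and 0.25 cut-offs are a widely used rule of thumb and are treated here as assumptions, as are the sample distributions.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Both inputs are lists of bin proportions that each sum to 1.
    eps guards against log(0) when a bin is empty.
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def drift_status(psi_value, watch=0.10, alert=0.25):
    """Map a PSI value to a dashboard status (thresholds are rule-of-thumb defaults)."""
    if psi_value >= alert:
        return "drift"
    if psi_value >= watch:
        return "watch"
    return "stable"


# Illustrative bin proportions for a model score, training vs. production.
training = [0.25, 0.25, 0.25, 0.25]
production = [0.05, 0.15, 0.30, 0.50]

value = psi(training, production)
status = drift_status(value)
print(f"PSI={value:.3f} status={status}")
```

A pipeline step could run this after each scoring batch and raise a reassessment flag whenever the status is anything other than "stable".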

📥 Notification and Workflow Triggers

Use ServiceNow workflows or Slack alerts to notify control owners when thresholds are breached (e.g., fairness below acceptable range).

Assign mitigation tasks automatically to risk owners based on predefined rule sets.
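A predefined rule set for task assignment might look like the sketch below. The metric names, thresholds, owners, and task titles are all hypothetical; in practice the resulting tasks would be created in your ticketing or GRC tool rather than returned as dictionaries.

```python
# Each rule: (signal name, breach test, owner, task). All values are illustrative.
RULES = [
    ("fairness_gap", lambda v: v > 0.10, "ai-ethics-lead", "Run fairness review"),
    ("psi", lambda v: v > 0.25, "model-owner", "Investigate model drift"),
    ("unapproved_deploy", lambda v: v is True, "grc-manager", "Initiate AI risk review"),
]


def assign_tasks(model, signals):
    """Return one mitigation task per rule that the model's signals breach."""
    tasks = []
    for metric, breached, owner, task in RULES:
        if metric in signals and breached(signals[metric]):
            tasks.append({"model": model, "owner": owner, "task": task})
    return tasks


tasks = assign_tasks(
    "credit-scoring-v3",
    {"fairness_gap": 0.20, "psi": 0.05, "unapproved_deploy": False},
)
for t in tasks:
    print(f"{t['owner']}: {t['task']} ({t['model']})")
```

Keeping the rules in a single table makes them easy for risk owners to review and version alongside the control objectives they support.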

💡 Pro Insight:

Tie these automations into your organization’s GRC control objectives to ensure that technical events translate into governance actions — not just visibility.

🔧 Real-World Example: End-to-End AI Risk Monitoring Flow

Model retraining occurs in Azure ML.

Telemetry is pushed via API to Power BI dashboard: performance shift detected.

Dashboard flags "Model Drift Detected – Action Required."

ServiceNow auto-creates a GRC task: “Initiate AI Risk Review – Model X.”

Control owner receives alert, completes review, and updates risk status.

Dashboard updates control compliance to “Mitigated,” and executive view reflects resolved state.
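The flow above can be modeled as a small state machine so that a model's risk status only moves through approved steps. The status names and allowed transitions below are assumptions derived from the steps in this example, not the behavior of Azure ML, Power BI, or ServiceNow.

```python
# Allowed status transitions; names mirror the flow described above.
ALLOWED = {
    "Healthy": {"Drift Detected"},
    "Drift Detected": {"Review Open"},
    "Review Open": {"Mitigated"},
    "Mitigated": {"Healthy", "Drift Detected"},
}


class ModelRiskState:
    """Tracks one model's risk status and rejects out-of-order updates."""

    def __init__(self, model):
        self.model = model
        self.status = "Healthy"
        self.history = ["Healthy"]

    def advance(self, new_status):
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status} -> {new_status} is not allowed")
        self.status = new_status
        self.history.append(new_status)


state = ModelRiskState("Model X")
for step in ("Drift Detected", "Review Open", "Mitigated"):
    state.advance(step)
print(state.history)
```

Rejecting skipped steps (for example, marking a model "Mitigated" with no review on record) is what turns the dashboard status into evidence rather than a label.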

🧠 Takeaway

Your AI GRC dashboard isn’t just a reporting layer — it’s the nerve center of risk-based decision-making.

To make it scalable and reliable:

Choose a platform that works with your tech stack and GRC tools.

Build rich integrations with your MLOps, data governance, and ticketing systems.

Automate everything that can be automated — from model alerts to control updates.

If you build your dashboard right, it won’t just report risk — it will help manage it.

🚀 Future-Ready AI GRC Dashboard Ideas

As AI matures in enterprise settings, traditional risk indicators (like model accuracy or control checklists) won't be enough.

Tomorrow’s GRC dashboards will need to provide predictive, real-time, and cross-regulatory insights.

Here are three forward-looking dashboard widgets that leading enterprises are beginning to explore:

🔮 1. AI Risk Forecasting Widget

What it is:

A predictive tool that uses historical risk event data (e.g., model drift, fairness violations, deployment errors) combined with metadata on model changes (e.g., retraining, new datasets, new algorithms) to forecast where and when risks are most likely to occur next.

Why it matters:

Proactive risk management is better than reactive containment. With predictive modeling, GRC teams can prioritize audits, assessments, and controls before issues emerge.

Example Use Case:

If a model has been retrained twice in the past quarter and is showing early drift, the dashboard flags it as “High Likelihood of Performance Degradation – Next 30 Days.”

Under the hood, the widget uses ML algorithms (yes, GRC using AI!) to detect patterns in risk emergence.

Bonus Integration:

Ingest risk incident logs from ServiceNow, model telemetry from MLflow, and changes from GitHub to fuel the forecast engine.
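To make the forecasting idea concrete, here is a deliberately simple stand-in: a logistic score with hand-picked weights rather than a trained model. The inputs, weights, and label cut-offs are all assumptions for illustration; a production widget would fit these from historical risk events.

```python
import math


def degradation_likelihood(retrains_last_quarter, psi_trend, new_dataset):
    """Return a score in (0, 1) for near-term performance degradation.

    The weights are placeholders, not values learned from data.
    """
    z = -2.0 + 0.8 * retrains_last_quarter + 6.0 * psi_trend + 0.7 * int(new_dataset)
    return 1 / (1 + math.exp(-z))


def likelihood_label(p):
    """Bucket a score into a dashboard label (cut-offs are illustrative)."""
    if p >= 0.7:
        return "High"
    if p >= 0.4:
        return "Medium"
    return "Low"


# A model retrained twice last quarter, showing early drift, on a new dataset.
p = degradation_likelihood(retrains_last_quarter=2, psi_trend=0.15, new_dataset=True)
label = likelihood_label(p)
print(f"likelihood={p:.2f} label={label}")
```

Even a transparent heuristic like this gives GRC teams a ranked list to prioritize audits, and it can later be replaced by a model trained on incident history.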

🧭 2. AI Ethics Radar

What it is:

A real-time radar-style widget that aggregates internal and external sentiment related to your organization’s AI use cases.

This could include:

Internal employee feedback

End-user complaints or concerns

Media sentiment analysis

Stakeholder surveys or ESG input

Regulatory scrutiny level by geography or sector

Why it matters:

Ethical risk is often soft-signaled before it becomes a headline or lawsuit. The Ethics Radar gives visibility into the perception and acceptability of AI systems—not just technical compliance.

Example Use Case:

A model used in HR for candidate screening is flagged due to rising employee concern captured through internal feedback tools like OfficeVibe or Qualtrics.

Another use case: a credit risk model gets negative coverage in the press, triggering a “Reputation Risk: High” tag on the dashboard.

Bonus Integration:

Connect to tools like Brandwatch, Hootsuite, internal survey platforms, or integrate custom sentiment APIs (ChatGPT, anyone?) for ongoing pulse checks.

🌍 3. Interactive Regulation Mapper

What it is:

A visual map that links each AI model in the organization to applicable global regulations and standards — and shows pass/fail status for each requirement.

Think of it like a live compliance heatmap for every AI use case.

Why it matters:

AI regulations and governance frameworks are emerging fast: the EU AI Act, the US Executive Order on AI, Singapore’s AI Verify, Brazil’s PL 21/2020, and more.

Organizations need a way to map models to the right frameworks and prove compliance dynamically.

Example Use Case:

A high-risk generative AI model used for customer interactions is mapped to EU AI Act – High-Risk Category and shows:

✅ DPIA Complete

❌ Human Oversight Control Not Implemented

✅ Logging and Traceability Enabled

Hovering over the failed control gives remediation steps and assigns ownership.

Bonus Integration:

Connect with compliance registers (e.g., OneTrust, TrustArc), internal control systems, or manual tagging systems in ServiceNow/Archer for tracking remediation workflows.
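The data model behind a regulation mapper can be sketched as a mapping from (model, framework) pairs to requirement statuses. The sketch below reuses the three checks from the example use case; the model name, owner, and remediation text are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    name: str
    passed: bool
    owner: str = "unassigned"
    remediation: str = ""


def open_gaps(regulation_map):
    """List every failed requirement with its model, framework, and owner."""
    gaps = []
    for (model, framework), requirements in regulation_map.items():
        for req in requirements:
            if not req.passed:
                gaps.append({
                    "model": model,
                    "framework": framework,
                    "requirement": req.name,
                    "owner": req.owner,
                    "remediation": req.remediation,
                })
    return gaps


# Illustrative mapping for one model against one framework.
regulation_map = {
    ("customer-chat-assistant", "EU AI Act (High-Risk)"): [
        Requirement("DPIA Complete", True),
        Requirement("Human Oversight Control", False,
                    owner="ai-governance-officer",
                    remediation="Add a human review step before responses are sent"),
        Requirement("Logging and Traceability", True),
    ],
}

gaps = open_gaps(regulation_map)
for g in gaps:
    print(f"{g['model']} / {g['framework']}: {g['requirement']} -> {g['owner']}")
```

The `remediation` and `owner` fields are what a hover tooltip on a failed control would display.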

🧠 Why These Matter for Strategic GRC

All three of these widgets elevate GRC from a checkbox exercise to a strategic command center for AI risk.

They:

Enable early warning and forecasting

Give real-time social and reputational visibility

Ensure legal and regulatory adaptability

Break down silos between Legal, Compliance, AI, and Ethics teams

Ready to Take Action?

If you're building out your enterprise's AI GRC capabilities, ask yourself:

Does your current dashboard help you predict risk, or just track it?

Are ethical concerns and public perception reflected in your governance tooling?

Can you map compliance obligations to models with a few clicks?

If not, these future-ready features should be on your roadmap — or better yet, your next sprint.

Conclusion

AI presents one of the greatest opportunities — and challenges — of our time. Enterprises that invest in robust, data-driven GRC dashboards tailored for AI risk will not only comply with evolving regulations but also earn stakeholder trust, protect reputational value, and ensure ethical AI usage.

Building a next-generation GRC dashboard for AI isn't just about oversight — it's about strategic foresight.

At GRC PROS, we provide thought-provoking content on cutting-edge industry practices, robust frameworks, and real-world business cases to enhance your GRC knowledge.